
I obtained my Ph.D. degree in Computer Science from Hefei University of Technology (HFUT) through a direct M.S.–Ph.D. program (Sep. 2020 to Dec. 2025), advised by Prof. Dan Guo and Prof. Meng Wang. From Aug. 2023 to Aug. 2024, I spent one year at the University of Science and Technology of China (USTC) under the guidance of Prof. Xun Yang. From Sep. 2024 to Aug. 2025, I was a visiting student at the National University of Singapore (NUS) under the guidance of Dr. Junbin Xiao, Prof. Angela Yao, and Prof. Tat-Seng Chua. I am actively seeking research discussions and collaboration opportunities, so feel free to contact me! My group at KAUST is recruiting visiting/remote students, with several openings available until July 2026. Students interested in MLLMs for healthcare are welcome to contact me!


My research focuses on visual-language understanding and reasoning, primarily scene-text visual question answering and visual grounding. I am currently expanding my research scope to egocentric video understanding and multimodal large language models for human assistance.


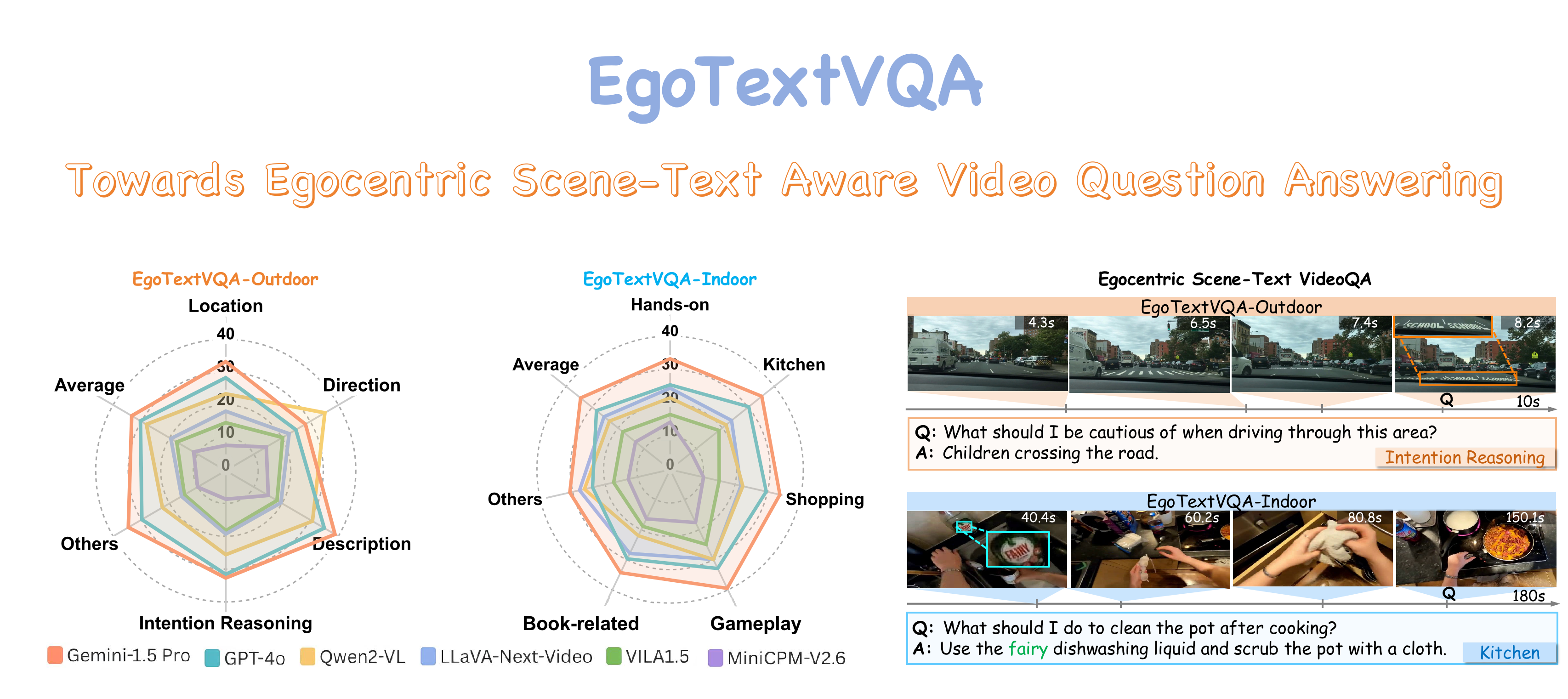

Sheng Zhou, Junbin Xiao, Qingyun Li, Yicong Li, Xun Yang, Dan Guo, Meng Wang, Tat-Seng Chua, Angela Yao. CVPR'25 [arXiv] [Project Page] [Code] [Dataset]


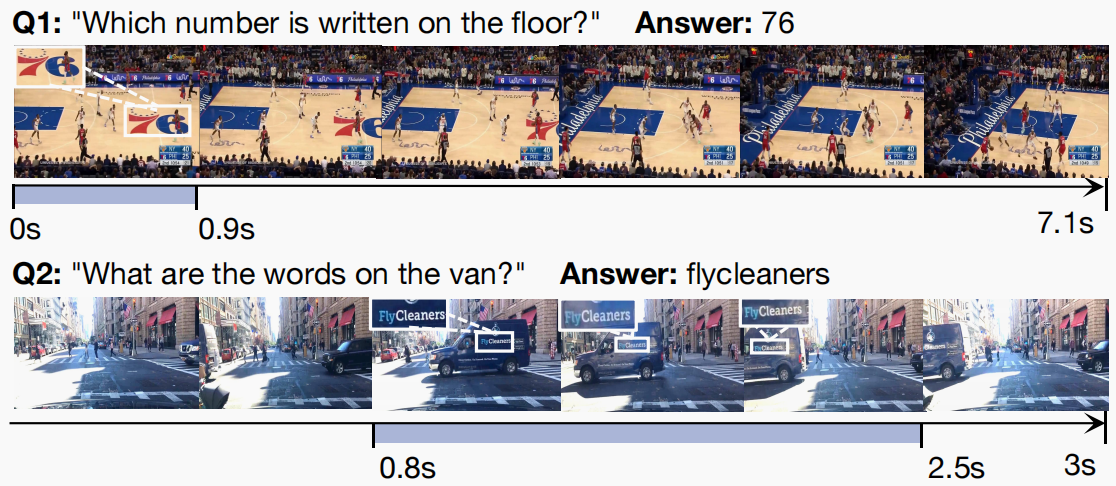

Sheng Zhou, Junbin Xiao, Xun Yang, Peipei Song, Dan Guo, Angela Yao, Meng Wang, Tat-Seng Chua. TMM'25 [arXiv] [Code] [Dataset]


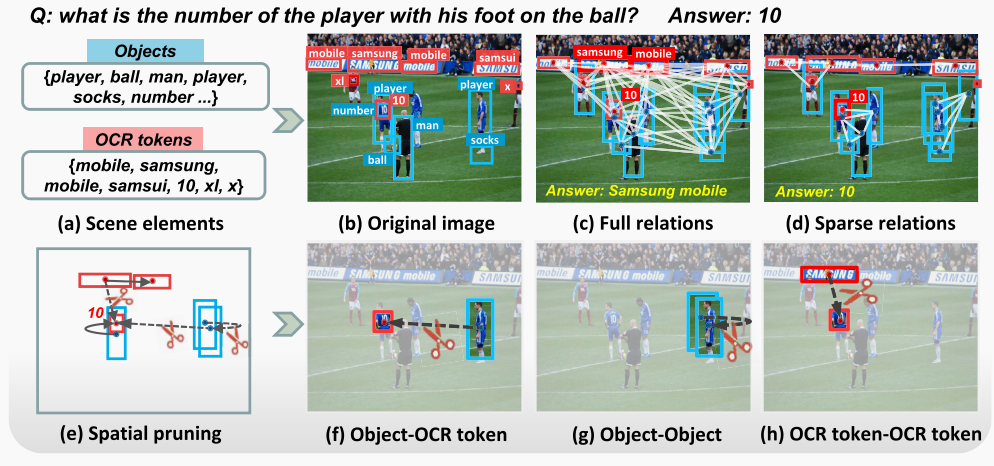

Sheng Zhou, Dan Guo, Xun Yang, Jianfeng Dong, Meng Wang. TOMM'24 [Paper] [Code]


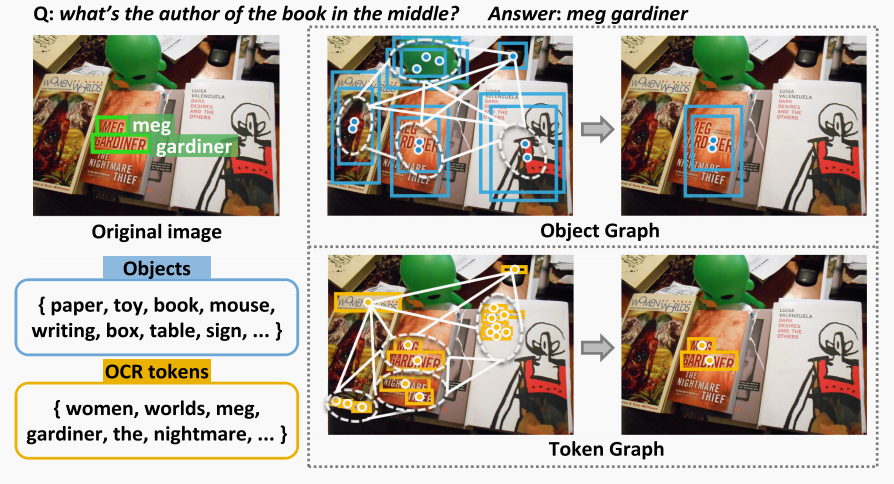

Sheng Zhou, Dan Guo, Jia Li, Xun Yang, Meng Wang. TIP'23 [Paper] [Code]
